Computer scientists at the University at Buffalo have developed an algorithm that detects deepfake portraits by examining the eyes and the way light is reflected in them. Here you can read the full study document.

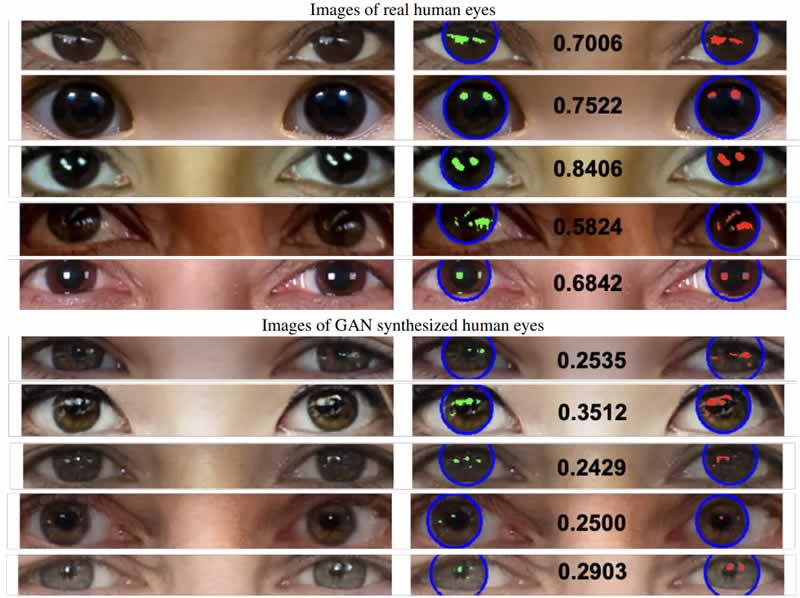

The key is how light is reflected in the eyes. The algorithm, which is 94% effective at detecting deepfakes, analyzes the light reflected in the subjects' eyes to determine whether an image is real or not.

More specifically, the system analyzes the corneas, whose surface reflects light much as a mirror would. The idea is to determine whether the reflected light patterns match what would appear in a real-world scene.

Logically, in a photo of a real person taken with a camera, the reflections in the two eyes will be very similar, since both eyes are looking in the same direction. Deepfakes tend to miss such small details, because the systems that generate them do not reproduce this kind of consistency.

Ironically, it is once again an artificial intelligence system that is in charge of finding the error: it analyzes the face and the light reflected in each eyeball, looking for these inconsistencies. It then generates a similarity score: the lower the score, the more likely the face in the image is a deepfake.
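As a rough illustration of how such a similarity score could work, the sketch below compares the bright reflection in two eye patches. It is not the researchers' implementation: the function names, the brightness threshold of 200, and the use of intersection-over-union as the score are all assumptions made for this example, and the eye patches are assumed to be already cropped and aligned grayscale grids.

```python
def highlight_mask(patch, threshold=200):
    """Mark pixels bright enough to count as a reflection (threshold is an assumption)."""
    return [[pixel >= threshold for pixel in row] for row in patch]


def similarity_score(left_patch, right_patch, threshold=200):
    """Intersection-over-union of the two eyes' highlight masks, from 0.0 to 1.0."""
    left = highlight_mask(left_patch, threshold)
    right = highlight_mask(right_patch, threshold)
    intersection = 0
    union = 0
    for left_row, right_row in zip(left, right):
        for in_left, in_right in zip(left_row, right_row):
            intersection += int(in_left and in_right)
            union += int(in_left or in_right)
    # No reflection found in either eye: nothing to compare, so report 0.0.
    return intersection / union if union else 0.0


# Hypothetical 3x3 grayscale eye patches (0 = dark, 255 = bright reflection).
left_eye = [[0, 255, 255], [0, 255, 0], [0, 0, 0]]
right_eye_real = [[0, 255, 255], [0, 255, 0], [0, 0, 0]]
right_eye_fake = [[0, 0, 0], [0, 255, 0], [0, 255, 255]]

print(similarity_score(left_eye, right_eye_real))  # matching reflections
print(similarity_score(left_eye, right_eye_fake))  # mismatched reflections
```

With these made-up patches, the matching pair scores higher than the mismatched pair, which mirrors the idea that a low score points to a deepfake.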

So far the tool has only been demonstrated on portraits, and it can only detect the inconsistencies described above in the subject’s eyes. If the eyes are not visible, the system will not work.

In fact, if the subject is not looking at the camera, the system is likely to return a false positive. These limitations are being investigated for future versions, but the important point is that the tool will not be able to detect the most advanced deepfakes.