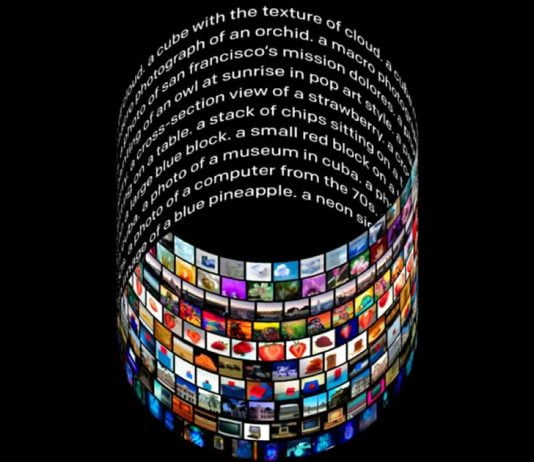

OpenAI, an artificial intelligence research company co-founded by Elon Musk and backed by Microsoft, has developed DALL-E, an AI system capable of creating images from natural-language text.

DALL-E converts words and sentences into images using a model with 12 billion parameters.

It is a neural network built on GPT-3, the third-generation autoregressive deep-learning language model introduced in May 2020. It interprets text, associates it with visual concepts, and creates images. In some cases, the results are so good that they look like real photos.
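To make the term "autoregressive" concrete, here is a minimal Python sketch of the general idea: each new token is predicted from all the tokens produced so far, then appended to the sequence. This is a toy illustration only, not DALL-E's or GPT-3's actual code. The names `generate` and `toy_next_token` are hypothetical, and the arithmetic rule stands in for what would be a trained neural network.

```python
from typing import Callable, List


def generate(
    prompt: List[int],
    next_token: Callable[[List[int]], int],
    n_new: int,
) -> List[int]:
    """Extend `prompt` by `n_new` tokens, one at a time.

    Each new token is computed from the full sequence so far,
    which is what makes the procedure autoregressive.
    """
    tokens = list(prompt)  # copy so the caller's list is not modified
    for _ in range(n_new):
        tokens.append(next_token(tokens))
    return tokens


def toy_next_token(tokens: List[int]) -> int:
    # Hypothetical stand-in for a trained model:
    # the next token is the sum of the context modulo 10.
    return sum(tokens) % 10


sequence = generate([1, 2, 3], toy_next_token, n_new=4)
print(sequence)
```

In a real system the prompt tokens would encode the text description and `next_token` would be a large neural network sampling from a learned probability distribution. The loop structure is the only part this sketch is meant to convey.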

The AI program creates images from sentences supplied as prompts. These describe the contents of the picture: for example, objects, their color, appearance, and shape, as well as the positions of elements in relation to one another. One of its most striking attributes is that it can depict objects that do not exist.

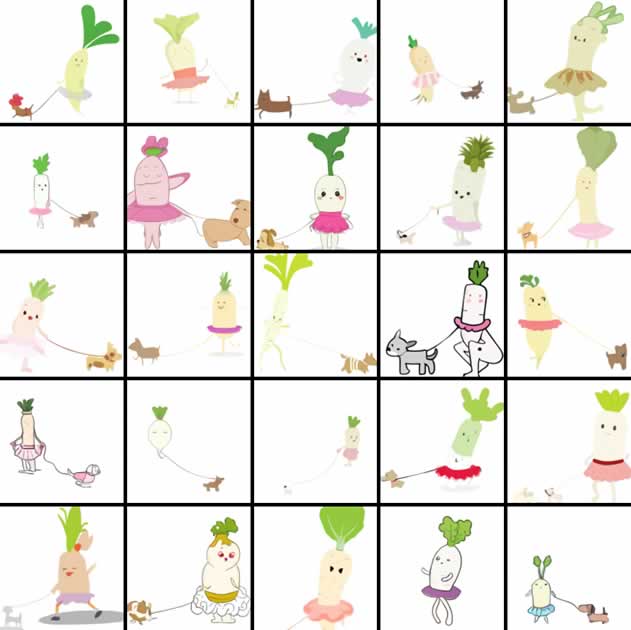

The system can combine concepts to create entirely new ones. For example, if you want to see “a baby daikon radish in a tutu walking a dog,” your wish is its command: DALL-E will show you this vegetable walking its pet. It also returns a series of images, so you can choose the most picturesque one.

If you ask instead for “a living room with armchairs and a painting of The Colosseum in Rome,” the system will present a series of images based on its interpretation of the request.

According to the OpenAI team, unlike 3D rendering engines, DALL-E can fill in details that are not explicitly stated in the description before presenting the results.

OpenAI is one of the companies that have made the most progress in the field of artificial intelligence. One of its long-term goals is to build “multimodal” neural networks. The company says that DALL-E could better understand the world thanks to its ability to learn different visual and textual concepts.