Meet EVA, a humanoid robot developed by a team of engineering researchers at Columbia University in New York City. More precisely, it is a robotic head designed to explore the dynamics of interaction between humans and robots. The aim is to move beyond the static expressions of other intelligent machines and offer a better experience for the people who interact with it.

Non-verbal language is a very important part of communicating with another person, since facial expressions provide extra information that helps build bonds and trust. Robotics has long aimed to design robots that resemble human beings as closely as possible, but until now they had not been able to identify and copy facial expressions, or even to express emotions.

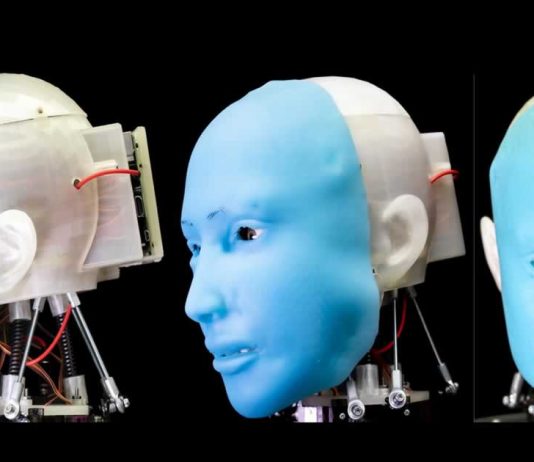

EVA consists of an adult-size synthetic human skull that is fully 3D printed, with a soft rubber face on the front. Inside, a series of motors selectively pull and release cables attached to various points in the lower part of the face, allowing the robot to make facial expressions.

But that is not all this robot can do. It can also express six basic emotions: anger, disgust, fear, joy, sadness and surprise. In addition, its creators have ensured that EVA can express a range of more nuanced emotions.

To develop these capabilities, the scientists began by recording EVA as it moved its face at random. The computer that controls the robot then analyzed the recordings and, using an integrated neural network, learned which facial expression corresponded to each combination of motor movements.
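The idea of learning from random movements can be illustrated with a toy example. The sketch below is not the researchers' code: it assumes three hypothetical motors, two hypothetical facial measurements, and a made-up linear "face" standing in for the real robot and camera. In place of a full neural network it fits a single linear layer by stochastic gradient descent, which is enough to show how recorded (movement, expression) pairs can teach a model what each motor combination does.

```python
import random

N_MOTORS = 3     # hypothetical number of cable motors
N_FEATURES = 2   # hypothetical facial measurements, e.g. mouth width, brow height

# Stand-in for the real face and camera: a hidden linear map from motor
# positions to facial measurements. These numbers are invented for illustration.
_HIDDEN = [[0.8, -0.2, 0.1],
           [0.0, 0.5, -0.6]]


def observe_face(command):
    """Return the measurements a camera would record for a motor command."""
    return [sum(w * c for w, c in zip(row, command)) for row in _HIDDEN]


def babble(n_samples, rng):
    """Move the motors at random and record (command, observation) pairs."""
    data = []
    for _ in range(n_samples):
        command = [rng.random() for _ in range(N_MOTORS)]
        data.append((command, observe_face(command)))
    return data


def predict(weights, command):
    """Predict facial measurements for a motor command with the learned model."""
    return [sum(w * c for w, c in zip(row, command)) for row in weights]


def train_self_model(data, epochs=50, lr=0.1):
    """Fit a single linear layer to the recordings by stochastic gradient descent."""
    weights = [[0.0] * N_MOTORS for _ in range(N_FEATURES)]
    for _ in range(epochs):
        for command, target in data:
            predicted = predict(weights, command)
            for i in range(N_FEATURES):
                error = predicted[i] - target[i]
                for j in range(N_MOTORS):
                    weights[i][j] -= lr * error * command[j]
    return weights


rng = random.Random(0)            # fixed seed so the run is repeatable
recordings = babble(200, rng)     # the "random face movements" stage
self_model = train_self_model(recordings)
```

After training, `predict(self_model, command)` should closely track `observe_face(command)` for motor commands the model has never seen, which is the sense in which the robot "knows" what its own face will do.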

The next step was to connect a camera that recorded the face of a person interacting with the robot. A second neural network then identified the person's expression and matched it to one of those EVA was capable of making. The robot could then copy that expression by moving its ‘facial muscles’.
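The matching step can also be sketched in a few lines. This is a simplified illustration rather than the team's method: a nearest-neighbour lookup replaces the second neural network, and the repertoire of expressions, the facial measurements and the motor commands are all invented values.

```python
import math

# Hypothetical repertoire: label -> (facial measurements, motor command).
# Every number here is made up for illustration.
REPERTOIRE = {
    "joy":      ([0.9, 0.6], [1.0, 0.2, 0.0]),
    "sadness":  ([0.2, 0.1], [0.0, 0.1, 0.8]),
    "surprise": ([0.5, 1.0], [0.3, 1.0, 0.1]),
}


def match_expression(observed):
    """Return the label of the repertoire expression closest to the observation."""
    return min(
        REPERTOIRE,
        key=lambda label: math.dist(REPERTOIRE[label][0], observed),
    )


def mimic(observed):
    """Pick the closest known expression and return its label and motor command."""
    label = match_expression(observed)
    return label, REPERTOIRE[label][1]


# Measurements taken from a (hypothetical) camera frame of a smiling person.
label, command = mimic([0.85, 0.55])
```

Here the observed measurements sit closest to the stored "joy" entry, so `mimic` returns that label together with the motor command that would reproduce it on the robot's face.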

EVA is still a laboratory experiment, and its researchers acknowledge that imitating human expressions may have limited applications on its own. Nevertheless, they believe it could improve the way people interact with technology, particularly with assistive robots.