YouTube has taken a significant step to combat misinformation and deceptive content fueled by artificial intelligence. The platform will soon require creators to alert viewers about videos containing “synthetic” content, meaning content created or manipulated using generative AI tools. The policy will cover both traditional long-form videos and the increasingly popular Shorts.

The implementation timeline for this new policy is not yet concrete, but YouTube’s official blog indicates that these content alteration warnings will start rolling out in the coming months, with a more prominent presence expected in 2024. The platform is working closely with YouTubers to ensure they are well-informed about these new requirements and guidelines.

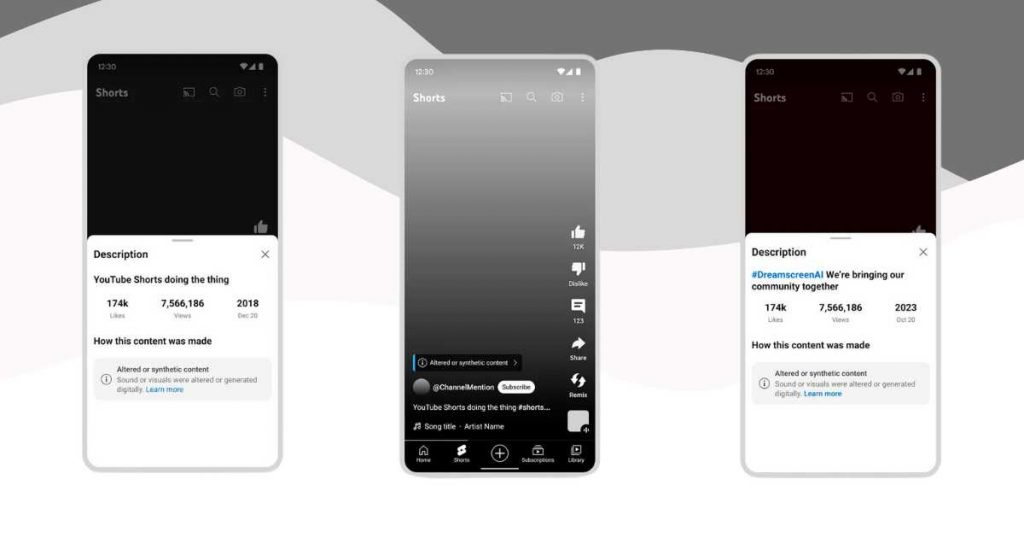

Once this feature is available, creators will need to indicate during the upload process whether a video has been manipulated using AI. YouTube will then display up to two warnings: one in the video description box and another directly on the video player. Both will carry the message, “Altered or synthetic content. The sound or images have been altered or digitally generated.”

However, it’s important to note that the warning on the video player will be limited to synthetic content related to sensitive topics prone to misinformation, such as public health crises, ongoing armed conflicts, events involving public officials, or electoral processes.
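The labeling rule described above can be sketched in a few lines of Python. This is only an illustration of the logic as reported here, not YouTube's actual implementation: the `Upload` class, the `labels_for` function, and the topic identifiers are all hypothetical names chosen for the example.

```python
from dataclasses import dataclass

# Label text as quoted in the article.
LABEL_TEXT = (
    "Altered or synthetic content. "
    "The sound or images have been altered or digitally generated."
)

# Hypothetical identifiers for the sensitive topics the article lists.
SENSITIVE_TOPICS = frozenset({
    "public_health_crisis",
    "armed_conflict",
    "public_officials",
    "elections",
})


@dataclass
class Upload:
    """Hypothetical stand-in for a video upload; not a real YouTube type."""
    title: str
    creator_disclosed_synthetic: bool
    topics: frozenset = frozenset()


def labels_for(upload: Upload) -> dict:
    """Return where the warning would appear, following the rule in the text."""
    if not upload.creator_disclosed_synthetic:
        return {}
    # The description-box label applies to every disclosed video.
    labels = {"description": LABEL_TEXT}
    # The player label is limited to sensitive topics.
    if upload.topics & SENSITIVE_TOPICS:
        labels["player"] = LABEL_TEXT
    return labels
```

Under these assumptions, a disclosed video about an election would receive both labels, while a disclosed gaming video would receive only the description-box label.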

YouTube’s initiative adds an extra layer of control over the spread of misleading or outright false content. However, some creators, especially those whose primary motive is to disseminate disinformation, might choose not to mark their videos as altered even when they are. Google will therefore need an effective system to enforce these new guidelines.

Creators who fail to mark their AI-manipulated videos accordingly will face penalties ranging from content removal to suspension from the YouTube Partner Program. The specifics of these sanctions, such as whether they apply after a certain number of violations, are not detailed, but they will apply in cases of “consistent” non-compliance.

These new synthetic content warnings do not replace YouTube’s community guidelines. YouTube can still remove inappropriate AI-manipulated content, even if it carries the required warning.

Lastly, YouTube’s policy won’t just apply to videos manipulated with third-party software. As Google is a leading force in AI and is gradually integrating it into its products, the warnings will also cover videos incorporating elements created with its tools, like Dream Screen, a utility for generating AI-based images and videos for use as backgrounds in Shorts.