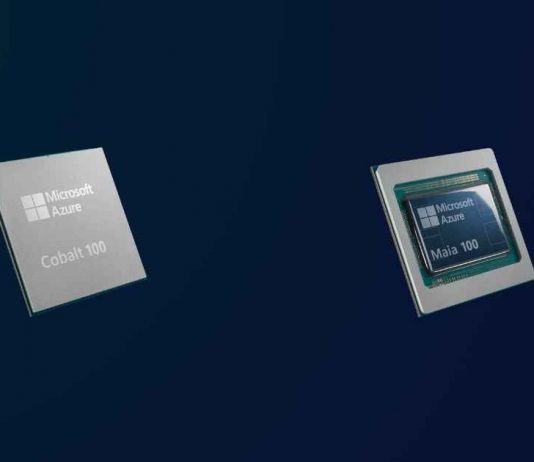

Microsoft has announced two custom-designed chips, the Azure Maia AI Accelerator and the Azure Cobalt CPU, which it plans to deploy in its Azure data centers starting in 2024.

Azure Maia is a custom AI accelerator designed for cloud-based training and inference of AI workloads. These include OpenAI models and services such as Bing, GitHub Copilot, and ChatGPT. The chip reflects Microsoft's push to make AI processing in the cloud faster and more efficient, which matters more as a growing number of industries come to rely on AI.

Complementing Azure Maia is Azure Cobalt, a cloud-native CPU based on the Arm architecture and optimized for performance, power efficiency, and cost-effectiveness on general-purpose workloads. Maia is specialized for AI, while Cobalt is intended to serve the broader range of cloud computing needs.

Detailed specifications remain sparse, but the Cobalt CPU is known to be based on Arm's Neoverse N2 design, with 128 cores and a 12-channel memory interface. Microsoft expects it to deliver up to 40% better performance than the current generation of Arm-based servers in Azure. The Maia chip is manufactured on TSMC's 5 nm process and uses CoWoS packaging with four HBM stacks.

Alongside its in-house chip development, Microsoft is expanding its partnerships with hardware vendors AMD and Nvidia. Azure will offer virtual machines accelerated by AMD Instinct MI300X GPUs to strengthen its AI processing capabilities. Microsoft is also launching new virtual machine series built on Nvidia's H100 and the upcoming H200 GPUs, aimed at AI training and inference.