A recent OpenAI project shows how AI agents can teach themselves to perform certain tasks.

In the experiment, OpenAI had AI agents play hide-and-seek: not one, not two, but 481 million rounds of the game. The results were striking, and they hold promise for developing more sophisticated artificial intelligence.

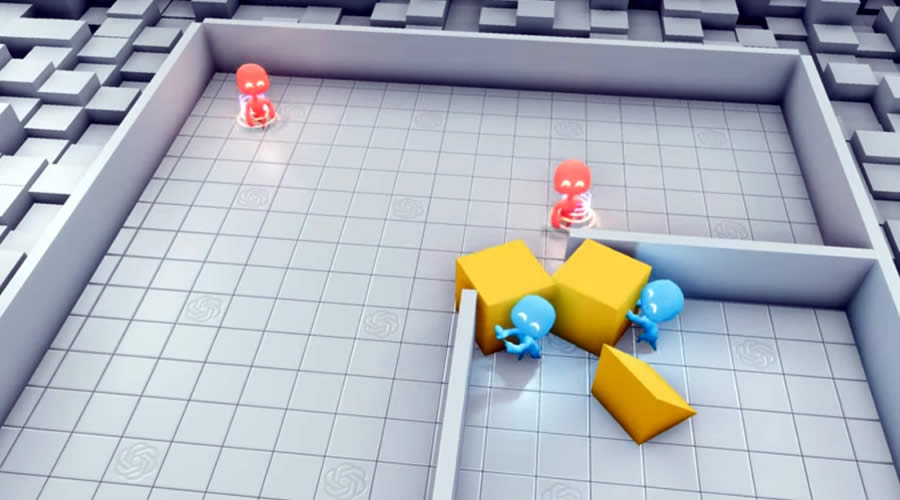

In the new simulated hide-and-seek environment, agents play on two teams. Hiders (blue) are tasked with staying out of the seekers' line of sight, and seekers (red) are tasked with keeping the hiders in view. Objects scattered throughout the environment can be grabbed and locked in place by hiders and seekers alike, and the environment also contains randomly generated immovable rooms and walls that agents must learn to navigate.

Before the game begins, there is a preparation phase in which the seekers are immobilized, giving hiders a chance to run away or change their environment.
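To make the rules concrete, here is a minimal Python sketch of how such a game could score each team at every time step. It is an illustration, not OpenAI's code: the function name `team_rewards`, the `sees` visibility table, and the reward values of +1 and -1 are all assumptions chosen for the example.

```python
from typing import List, Tuple


def team_rewards(sees: List[List[bool]], step: int, prep_steps: int) -> Tuple[float, float]:
    """Return (hider_reward, seeker_reward) for one time step.

    sees[i][j] is True when seeker i has line of sight to hider j.
    The +1 / -1 values are assumed for illustration only.
    """
    # During the preparation phase the seekers cannot move, so nobody is scored.
    if step < prep_steps:
        return (0.0, 0.0)

    # Hiders succeed as a team only if no seeker can see any hider.
    any_hider_seen = any(any(row) for row in sees)
    hider_reward = -1.0 if any_hider_seen else 1.0

    # The game is zero-sum: whatever the hiders gain, the seekers lose.
    return (hider_reward, -hider_reward)
```

Called with one seeker and two hiders, `team_rewards([[False, True]], step=10, prep_steps=5)` penalizes the hiders because one of them is in view, while the same call with `step=0` scores nothing because the preparation phase is still running.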

The experiment builds on two existing ideas in the field. The first is multi-agent learning: placing multiple algorithms in competition or coordination to provoke emergent behaviors. The second is reinforcement learning: a machine-learning technique in which an agent learns to achieve a goal through trial and error.
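Trial-and-error learning can be illustrated with a toy example that is far simpler than the hide-and-seek setup. In the sketch below, a single hider repeatedly picks one of two actions, receives a reward, and keeps a running average of how well each action has worked. The action names, the success probabilities, and the function `learn_by_trial_and_error` are hypothetical, and this simple averaging scheme is not the training method used in the experiment.

```python
import random


def learn_by_trial_and_error(success_prob, episodes=5000, epsilon=0.1, seed=0):
    """Estimate the average reward of each action by trying them repeatedly.

    success_prob maps an action name to its (made-up) chance of success.
    """
    rng = random.Random(seed)
    actions = list(success_prob)
    value = {a: 0.0 for a in actions}  # running average reward per action
    count = {a: 0 for a in actions}    # how often each action was tried

    for _ in range(episodes):
        if rng.random() < epsilon:
            # Explore: occasionally try a random action.
            action = rng.choice(actions)
        else:
            # Exploit: otherwise pick the action that looks best so far.
            action = max(actions, key=lambda a: value[a])

        # Reward is +1 if the hider stays hidden, -1 if it is found.
        reward = 1.0 if rng.random() < success_prob[action] else -1.0

        # Update the running average for the chosen action.
        count[action] += 1
        value[action] += (reward - value[action]) / count[action]

    return value


values = learn_by_trial_and_error({"run_away": 0.2, "build_shelter": 0.8})
best = max(values, key=values.get)
```

Nobody tells the agent which action is better. It discovers that `build_shelter` pays off more often simply by trying both and tracking the outcomes, which is the core idea behind reinforcement learning.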

OpenAI's published initial results show how the AI agents learn to play the simple children's game from scratch and progressively become so proficient that they invent six distinct strategies, all without any supervision or assistance from the programmers.