Apple will introduce a new cryptographic system for detecting CSAM (child sexual abuse material) in iOS and iPadOS, with the aim of limiting the distribution of child pornography on services like iCloud.

According to the report, Apple will begin scanning photos stored on iPhones and iPads for images of child abuse while, the company says, maintaining the security and privacy of users.

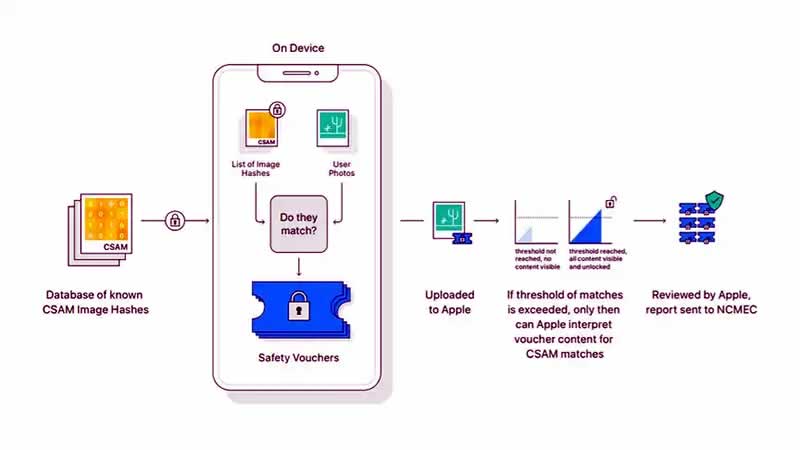

Cryptography specialist Matthew Green points out that Apple’s tool would use a hashing system to detect illegal material and would run client-side, that is, locally on the iPhone. A hash function maps a file to a short, fixed-length value, the hash, so that two files can be compared by comparing their hashes rather than their contents. A hash looks like an indecipherable string of letters and numbers and is, for practical purposes, distinct for each distinct document.
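To make the idea of comparing files by hash concrete, here is a minimal sketch in Python. It is not Apple’s implementation: SHA-256 is swapped in purely for illustration, and the names `file_digest`, `KNOWN_DIGESTS` and `matches_known`, as well as the sample byte strings, are hypothetical.

```python
import hashlib


def file_digest(data: bytes) -> str:
    """Return the SHA-256 digest of the data as a 64-character hex string."""
    return hashlib.sha256(data).hexdigest()


# Hypothetical stand-in for a database of hashes of known illegal images.
# Only digests are stored here, never the images themselves.
KNOWN_DIGESTS = {file_digest(b"known-bad-image-bytes")}


def matches_known(data: bytes, known=KNOWN_DIGESTS) -> bool:
    """Check a file against the database by comparing digests only."""
    return file_digest(data) in known
```

With these assumptions, `matches_known(b"known-bad-image-bytes")` is true and `matches_known(b"holiday-photo-bytes")` is false. A cryptographic hash such as SHA-256 changes completely if a single byte of the file changes, so this sketch only recognizes exact copies.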

The CSAM scanning technology is reminiscent of Microsoft’s PhotoDNA, which also checks photos and videos against a database of known child pornographic content, but does so on the servers of Google, Facebook, Twitter and Microsoft rather than on users’ devices. However, Apple’s new CSAM scanning technology has attracted criticism from security experts around the world.

According to Apple, the hash database comes from the US non-governmental organization NCMEC (National Center for Missing & Exploited Children) and other, unspecified child protection organizations. It is integrated directly into iOS and updated along with the operating system.

Apple stores the result of the analysis, positive or negative, in a “cryptographic safety voucher”, which is uploaded to iCloud together with the respective picture. The result is meant to be stored in such a way that neither Apple nor the user concerned can see it.

Only when the number of positive analyses, or vouchers, stored in iCloud exceeds a threshold set by Apple does the system raise an alarm. Each alarm is then checked manually by Apple.
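The threshold rule can be sketched as a simple counter. This is a plain-text illustration only: the value of `REVIEW_THRESHOLD` and the `Account` class are hypothetical, and the sketch does not model the cryptography that is supposed to keep individual results hidden from Apple and from the user until the threshold is exceeded.

```python
from dataclasses import dataclass, field

# Hypothetical value; the article only says that Apple sets a threshold.
REVIEW_THRESHOLD = 3


@dataclass
class Account:
    # One entry per uploaded photo: True if its voucher records a match.
    vouchers: list = field(default_factory=list)

    def add_voucher(self, matched: bool) -> None:
        """Record the analysis result that accompanies an uploaded photo."""
        self.vouchers.append(matched)

    def needs_manual_review(self, threshold: int = REVIEW_THRESHOLD) -> bool:
        """Raise the alarm only once positive vouchers exceed the threshold."""
        return sum(self.vouchers) > threshold
```

Under these assumptions, an account with three positive vouchers is not flagged, while a fourth positive voucher triggers manual review. Negative vouchers never count toward the threshold.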

If the review confirms child pornography, the user’s iCloud account is blocked and a report is sent to NCMEC. The NGO can then call in law enforcement authorities. Apple does not intend to notify those affected explicitly, but they can object to the blocking of their accounts.

Apple also says that parents will be able to receive alerts if their children try to send content classified as suspicious.