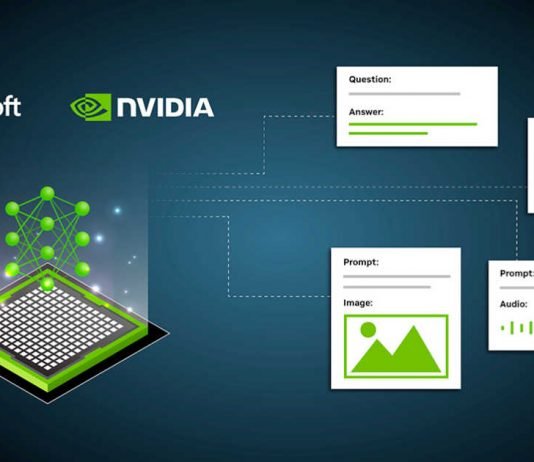

NVIDIA and Microsoft have announced a partnership to accelerate Windows 11 applications that use generative AI.

The collaboration builds on NVIDIA’s RTX GPUs, whose dedicated Tensor Cores are designed to accelerate AI workloads. The two companies aim to speed up AI development and bring AI features to the next generation of Windows programs.

The partnership includes several developments intended to help developers build AI into their Windows 11 applications. One is a framework for optimizing and deploying AI models, which makes it easier for developers to integrate AI capabilities into their software. Microsoft and NVIDIA will also provide tools tailored specifically to building AI applications on Windows PCs.

Traditionally, AI development has been carried out mainly on Linux, so developers who also needed the Windows ecosystem had to dual-boot their machines or keep separate PCs. To make this easier, Microsoft developed the Windows Subsystem for Linux (WSL), which lets developers run a Linux environment directly on Windows.

NVIDIA has worked closely with Microsoft to bring GPU acceleration to WSL, along with support for the full NVIDIA AI software stack. As a result, developers can use their Windows PCs for local AI development, running GPU-accelerated deep learning frameworks inside WSL.
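For readers who want to confirm that GPU acceleration is reaching their WSL environment, the short Python script below is a minimal sketch, not an official NVIDIA or Microsoft tool. It assumes a WSL 2 distribution and relies on two details of how current WSL 2 builds expose the GPU: the `/dev/dxg` device node and the Windows driver’s user-mode libraries mounted under `/usr/lib/wsl/lib`. The function names are hypothetical, and the checks are heuristics rather than a guarantee that a given framework will find the GPU.

```python
import os
import platform


def is_wsl() -> bool:
    """Heuristic: return True if the kernel release string identifies WSL."""
    # WSL kernels report a release string containing "microsoft",
    # e.g. "5.15.90.1-microsoft-standard-WSL2" (case varies, so lowercase it).
    return "microsoft" in platform.release().lower()


def wsl_gpu_paravirtualization_present() -> bool:
    """Heuristic: WSL 2 exposes the host GPU through the /dev/dxg device
    and mounts the Windows driver's user-mode libraries under /usr/lib/wsl/lib."""
    return os.path.exists("/dev/dxg") and os.path.isdir("/usr/lib/wsl/lib")


if __name__ == "__main__":
    print(f"Running under WSL: {is_wsl()}")
    print(f"GPU paravirtualization visible: {wsl_gpu_paravirtualization_present()}")
```

Once these checks pass, a framework’s own API gives the definitive answer; in PyTorch, for example, `torch.cuda.is_available()` reports whether CUDA is usable.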

NVIDIA has been steadily improving Tensor Core performance for AI inference. Its driver development now focuses on making the GPU more efficient in inference workloads such as Stable Diffusion.

In line with this focus, NVIDIA has announced plans to release the 532.03 optimization driver, which, combined with models optimized using Microsoft’s Olive toolchain, will deliver a more than 2x improvement in AI performance.

As AI becomes part of almost every Windows application, efficient inference matters more and more, especially on laptops. NVIDIA is therefore also set to introduce new Max-Q low-power inference for dedicated AI workloads on RTX GPUs, designed to optimize Tensor Core performance while minimizing power consumption. This should extend battery life while keeping systems cool and quiet.