The field of Virtual Reality (VR), Augmented Reality (AR) and Mixed Reality (MR), three distinct concepts that fall under the broader category of synthesized reality, is the focus of intense research and development in Apple’s laboratories. The company has long hinted, more or less explicitly, that it sees this field as the “next big thing” of the near future.

Patents filed by Apple periodically emerge that cover concepts and technologies that could be used in future products. The conditional is always required in these cases: many patent applications are filed, often only to protect intellectual property, and obviously not all of them end up in a real product.

Some documents, however, deserve attention because they may offer a clue to what the Cupertino company has in mind when it comes to Synthesized Reality (SR). Today we take a closer look at three such Apple patents.

Apple Glass that identifies the source of a sound

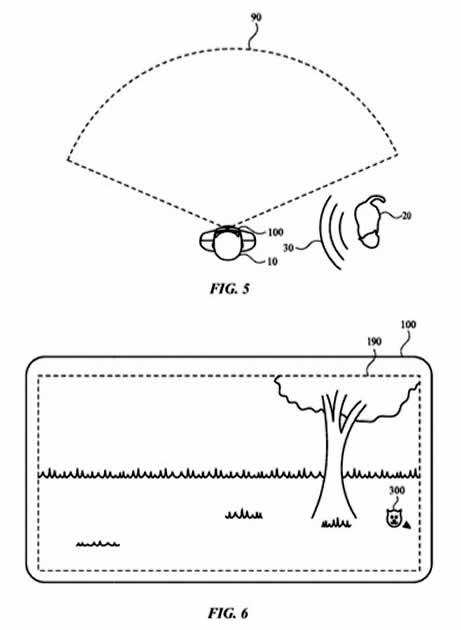

The first patent application we examine describes a technology capable of detecting sounds in the user’s surroundings and telling the user where they come from.

The patent application, entitled “Audio-based Feedback For Head-mountable Device,” does not refer to a specific device, but it is natural to think that it could be designed for an augmented reality headset such as the much-rumored Apple Glass.

“A head-mountable device can include multiple microphones for directional audio detection. The head-mountable device can also include a speaker for audio output and/or a display for visual output. The head-mountable device can be configured to provide visual outputs based on audio inputs by displaying an indicator on display based on a location of a source of a sound” — the patent reads.
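The patent does not say how the direction of a sound would be computed. As a rough illustration only, one common way to get a direction from two microphones is to measure how much later the sound reaches one microphone than the other. The sketch below assumes exactly two microphones, a far-away source, and made-up values for sample rate and microphone spacing; the function names are hypothetical and nothing here is taken from Apple’s filing.

```python
import math

SPEED_OF_SOUND = 343.0  # metres per second, approximate value in room-temperature air


def best_lag(left, right, max_lag):
    """Return the lag (in samples) at which `right` best lines up with `left`.

    A positive result means the sound reached the right microphone later.
    Uses a brute-force cross-correlation, which is fine for short buffers.
    """
    best, best_score = 0, float("-inf")
    n = len(left)
    for lag in range(-max_lag, max_lag + 1):
        score = 0.0
        for i in range(n):
            j = i + lag
            if 0 <= j < n:
                score += left[i] * right[j]
        if score > best_score:
            best, best_score = lag, score
    return best


def bearing_degrees(lag, sample_rate, mic_spacing):
    """Convert a sample lag into an angle from straight ahead.

    Positive angles point toward the left microphone (the one the sound
    reached first). Assumes a distant source, so the wavefront is flat.
    """
    delay = lag / sample_rate                      # seconds
    ratio = SPEED_OF_SOUND * delay / mic_spacing   # sine of the bearing
    ratio = max(-1.0, min(1.0, ratio))             # guard against noisy lags
    return math.degrees(math.asin(ratio))


# Hypothetical example: a click that hits the right microphone 3 samples late.
left = [0.0] * 20
right = [0.0] * 20
left[5] = 1.0
right[8] = 1.0

lag = best_lag(left, right, max_lag=5)
angle = bearing_degrees(lag, sample_rate=48_000, mic_spacing=0.15)
print(lag, round(angle, 1))
```

A headset could then place an on-screen indicator at the computed angle. A real device would likely use more than two microphones and far more robust signal processing.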

The patent does not go into much detail, but it still offers some clues on which to build hypotheses. We can imagine that Apple is toying with the idea of an augmented reality device capable of “augmenting” not only what the user sees but also what the user hears.

The patent application is signed by three engineers, Killian J. Poore, Stephen E. Dey, and Trevor J. Ness, who also signed an earlier patent application on technologies for improving the user’s vision in low-light conditions through an AR device.

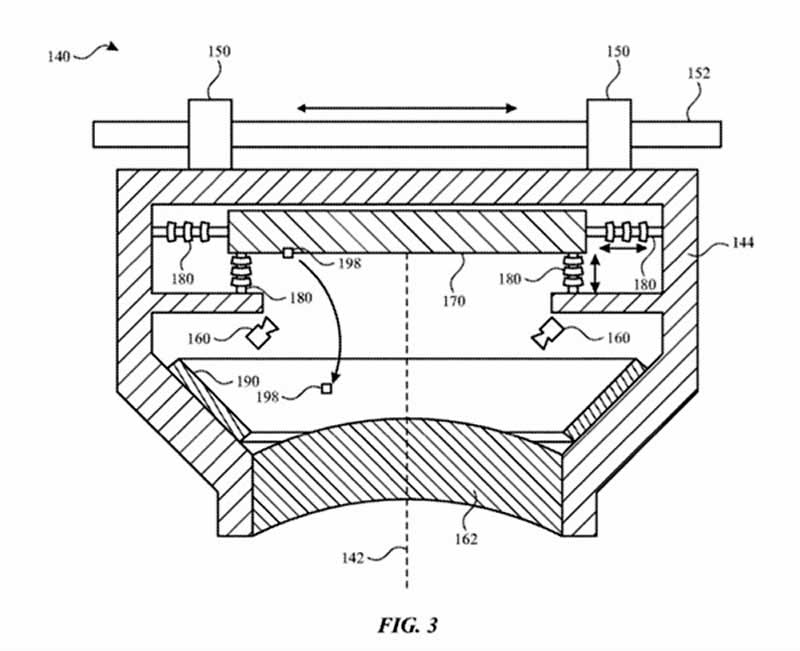

Automatic cleaning system for the lenses of an AR viewer

Another patent application of interest covers a technology for automatically cleaning the lenses of an AR device, thus improving the user’s visual comfort.

Apple’s idea, described in “Particle Control For Head-mountable Device,” is to equip the device with a dust removal system. The easiest way to do this is through vibrations, just as with the sensor cleaning systems built into modern digital cameras. The system could run periodically, when it detects certain conditions, or following a command from the user.

“A head-mountable device can provide an optical module that removes particles from an optical pathway and captures the particles, so they do not interfere with the user’s view of and/or through optical elements. For example, the display element and/or another optical element can be moved in a manner that releases particles on a viewing surface thereof. The optical module can include a particle retention element that securely retains the particles so that they remain outside of the optical pathway. Such particle removal and retention can improve and maintain the quality of a user’s visual experience via the optical module.” — reads the patent application.

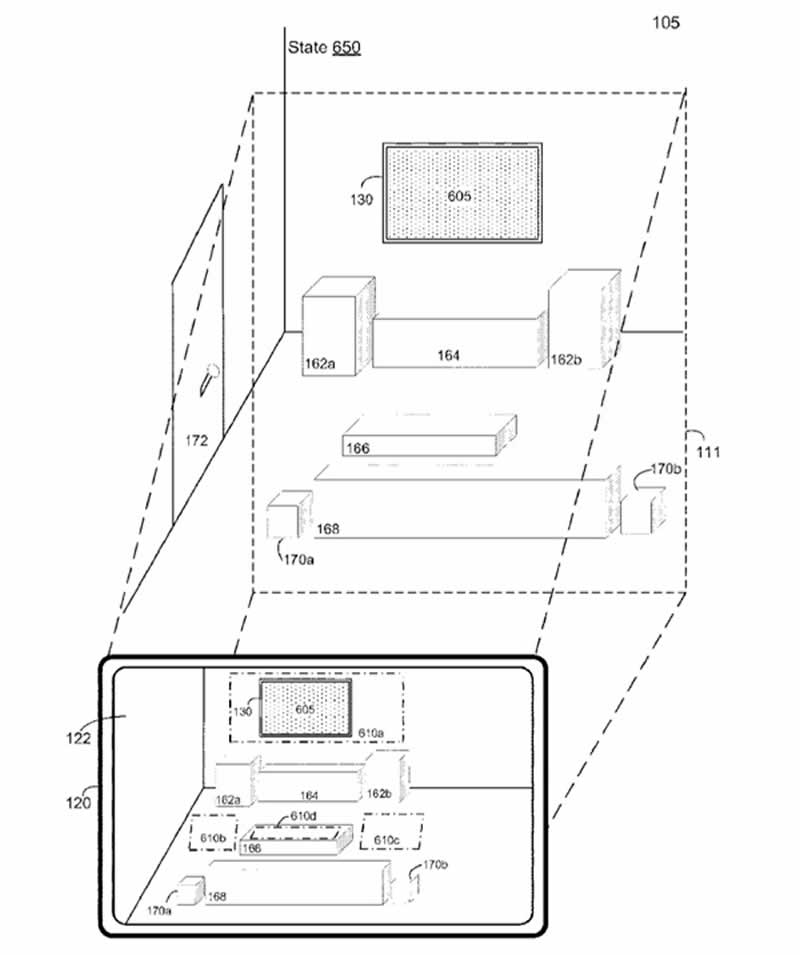

LiDAR and synthesized environments: the goal is immersion

The third patent we look at today concerns the technologies and techniques that could be used to recreate synthesized reality environments.

In the document “Method And Device For Tailoring A Synthesized Reality Experience To A Physical Setting,” Apple describes a method for obtaining data on the environment the user is in, including the spatial information of a “volumetric region around a user.” Based on this information, a map created using LiDAR technology would then be used to compose or customize a synthesized reality experience.
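To give a feel for what mapping a “volumetric region around a user” could involve, here is a minimal sketch that sorts 3D points (such as those a LiDAR scanner might return) into a coarse grid of cubes around the user. This is only an illustration under our own assumptions: the function name, the cube-shaped region, and the sample values are hypothetical and are not described in the patent.

```python
import math
from collections import Counter


def voxelize(points, user_pos, half_width, voxel_size):
    """Count scanned points per voxel inside a cube centred on the user.

    points     -- iterable of (x, y, z) positions in metres
    user_pos   -- (x, y, z) position of the user in the same frame
    half_width -- half the side length of the cube of interest, in metres
    voxel_size -- side length of each grid cell, in metres
    Returns a Counter mapping integer voxel indices to point counts.
    """
    ux, uy, uz = user_pos
    counts = Counter()
    for x, y, z in points:
        dx, dy, dz = x - ux, y - uy, z - uz
        # Skip anything outside the region around the user.
        if max(abs(dx), abs(dy), abs(dz)) > half_width:
            continue
        key = (
            math.floor(dx / voxel_size),
            math.floor(dy / voxel_size),
            math.floor(dz / voxel_size),
        )
        counts[key] += 1
    return counts


# Hypothetical scan: five points, one of them far outside the room.
scan = [
    (0.1, 0.1, 0.1),
    (0.2, 0.3, 0.4),
    (1.2, 0.0, 0.0),
    (10.0, 0.0, 0.0),
    (-0.1, 0.0, 0.0),
]
grid = voxelize(scan, user_pos=(0.0, 0.0, 0.0), half_width=2.0, voxel_size=0.5)
print(len(grid), grid[(0, 0, 0)])
```

Occupied cells in such a grid hint at where walls, floors and furniture are, which is the kind of information an SR experience would need in order to place content on them.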

Apple seems to have a very imaginative vision here, at least judging by what we read in the patent application: “For example, while a user is watching a movie in his/her living room on a television, the user may wish to experience a more immersive version of the movie where portions of the user’s living room may become part of the movie scenery.” The patent then expands on the example, citing the projection of parts of the film onto walls or floors, or the display of maps, graphics or additional information.

Although such a technology may be primarily aimed at AR/VR/MR devices, Apple specifies that this concept could also be applied to tablets and smartphones, and in general, anything that has a display and can provide contextual elements of SR experiences.

Finally, the document also mentions the possibility of interacting with the synthesized environment and issuing commands, by voice or gesture, to modify it.