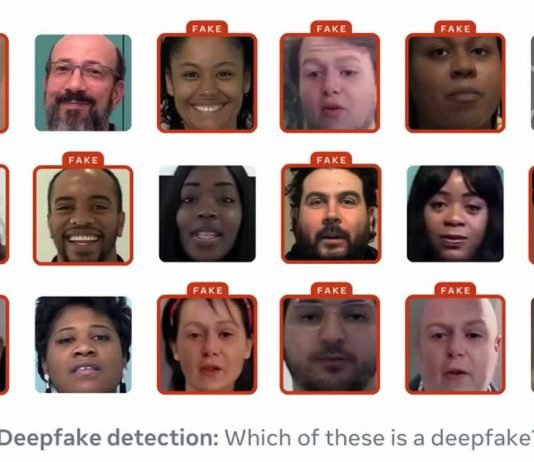

The Facebook AI team, in collaboration with Michigan State University, has presented a research method of “detecting and attributing deepfakes” that is based on “reverse engineering from a single AI-generated image to the generative model used to produce it.”

This technology could help Facebook track the distributors of deepfakes, whether simple disinformation or pornographic images, on its social networks. The tool is still in development and is not yet ready for deployment.

According to Facebook, previous work has been limited to determining whether an image is real or a deepfake. The researchers go a step further with what is known as reverse engineering, which aims to uncover the “unique patterns behind the AI model used to generate a single deepfake image.” By comparing several images, it can also be determined whether they were likely created with the same model.

The team starts by recognizing so-called fingerprints. Comparable fingerprints exist in non-generated images, where they reveal the camera model or sensor used. According to the researchers, the generative machine learning models that produce deepfakes leave similar unique patterns in their output images.
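To make the fingerprint idea concrete, here is a minimal Python sketch on synthetic data. It is not the researchers' method: it borrows the classical camera-forensics trick of treating the high-frequency residual (an image minus a smoothed copy of itself) as a crude fingerprint and comparing residuals by normalized correlation. All function names, the box-blur denoiser, and the synthetic "sources" are assumptions made purely for illustration.

```python
import numpy as np

def box_blur(img, k=3):
    """k x k mean filter with edge padding (a stand-in for a real denoiser)."""
    pad = k // 2
    padded = np.pad(img, pad, mode="edge")
    out = np.zeros_like(img, dtype=np.float64)
    h, w = img.shape
    for dy in range(k):
        for dx in range(k):
            out += padded[dy:dy + h, dx:dx + w]
    return out / (k * k)

def residual(img):
    """High-frequency residual: the image minus its smoothed version."""
    img = img.astype(np.float64)
    return img - box_blur(img)

def estimate_fingerprint(images):
    """Average the residuals of several images assumed to share a source."""
    return np.mean([residual(im) for im in images], axis=0)

def similarity(a, b):
    """Normalized correlation between two residuals or fingerprints."""
    a = a - a.mean()
    b = b - b.mean()
    denom = np.linalg.norm(a) * np.linalg.norm(b)
    return float((a * b).sum() / denom) if denom > 0 else 0.0

# --- Synthetic demo (hypothetical data, not real deepfakes) ---
rng = np.random.default_rng(0)
shape = (64, 64)

# Each hypothetical "source" leaves its own fixed noise-like pattern.
pattern_a = rng.normal(0.0, 1.0, shape)
pattern_b = rng.normal(0.0, 1.0, shape)

def make_image(pattern):
    """Smooth random content plus the source-specific pattern."""
    content = box_blur(rng.normal(0.0, 10.0, shape), k=7)
    return content + pattern

fingerprint_a = estimate_fingerprint([make_image(pattern_a) for _ in range(8)])
fingerprint_b = estimate_fingerprint([make_image(pattern_b) for _ in range(8)])

# A new image from source A should correlate with A's fingerprint, not B's.
query = residual(make_image(pattern_a))
same_source_score = similarity(query, fingerprint_a)
other_source_score = similarity(query, fingerprint_b)
print(f"same source:  {same_source_score:.3f}")
print(f"other source: {other_source_score:.3f}")
```

In this toy setup the query image scores far higher against the fingerprint of its own source than against the other one, which is the basic intuition behind checking whether several images share an origin. Real generative-model fingerprints are subtler, and the research described here estimates them with a learned network rather than a fixed filter.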

The approach then tries to derive properties of the underlying model from these fingerprints, in order to draw conclusions about its network size and thus its architecture. Because these properties differ from model to model, the models can be recognized and told apart. According to the team's explanation, this already works in initial experiments.

Research leader Tal Hassner said that identifying the traits of unknown models is important because the software for creating deepfakes is easy to customize. This potentially allows attackers to cover their tracks if investigators try to trace their actions.

With its research, Facebook hopes to create tools for third parties “to better investigate incidents of coordinated disinformation using deepfakes, as well as open up new directions for future research.”