Nvidia has partnered with Amazon Web Services (AWS) to launch what is now the fastest cloud AI supercomputer. The system uses 16,384 GH200 Grace Hopper Superchips, each of which pairs a Grace CPU with a Hopper GPU, setting a new benchmark in high-performance computing.

Since their collaboration began in 2010, Nvidia and AWS have both seen significant growth. Their latest venture is a strategic move to maintain their leadership positions in the rapidly evolving AI industry. The GH200 chip, which has recently seen a surge in demand, is expected to be deployed in large-scale systems globally.

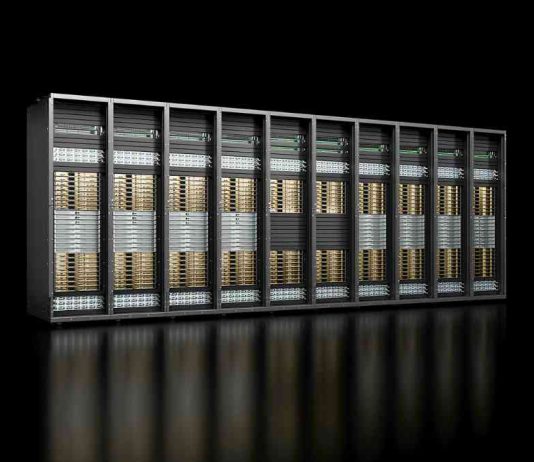

This supercomputer, part of Project Ceiba, is based on Nvidia’s “GH200 NVL32 Multi-Node Platform.” Each rack holds 32 GH200 GPUs arranged in 16 trays, along with nine trays dedicated to NVLink switches. The liquid-cooled design sustains performance in a compact space, and the system will be offered as an Amazon EC2 instance. Project Ceiba, located in an AWS data centre in the United States, primarily serves Nvidia as a DGX Cloud deployment platform for developing future solutions.
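The rack figures above imply a rough scale for the whole system. The following is a minimal back-of-the-envelope sketch in Python, assuming (the article does not state this) that all 16,384 GPUs sit in identical NVL32 racks; the function name `rack_layout` and its field names are illustrative only.

```python
# Figures quoted in the article.
TOTAL_GPUS = 16_384          # GH200 GPUs in the full system
GPUS_PER_RACK = 32           # GPUs in one GH200 NVL32 rack
COMPUTE_TRAYS_PER_RACK = 16  # trays holding GPUs
SWITCH_TRAYS_PER_RACK = 9    # trays holding NVLink switches


def rack_layout(total_gpus, gpus_per_rack, compute_trays, switch_trays):
    """Derive rack and tray counts, assuming every rack is identical."""
    if total_gpus % gpus_per_rack != 0:
        raise ValueError("GPU count does not divide evenly into racks")
    if gpus_per_rack % compute_trays != 0:
        raise ValueError("GPUs do not divide evenly across compute trays")

    racks = total_gpus // gpus_per_rack
    return {
        "racks": racks,
        "gpus_per_tray": gpus_per_rack // compute_trays,
        "trays_per_rack": compute_trays + switch_trays,
        "total_compute_trays": racks * compute_trays,
        "total_switch_trays": racks * switch_trays,
    }


layout = rack_layout(
    TOTAL_GPUS, GPUS_PER_RACK, COMPUTE_TRAYS_PER_RACK, SWITCH_TRAYS_PER_RACK
)
for name, value in layout.items():
    print(f"{name}: {value}")
```

Under that assumption, the system would span 512 racks with two GPUs per compute tray and 25 trays per rack. The real deployment may differ if some racks use another configuration.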

AWS customers will have access to a variety of Nvidia solutions, ranging from the GH200 racks to upcoming offerings built on the smaller Nvidia L40S and L4 GPUs. This range of products lets AWS and Nvidia meet the needs of different customer segments.

The collaboration between Nvidia and AWS is a significant step in the AI and cloud computing sectors. By combining Nvidia’s advanced GPU technology with AWS’s robust cloud infrastructure, they are redefining the standards for AI supercomputing in the cloud.