NVIDIA CEO Jensen Huang made several major announcements at Computex 2023 in Taipei, Taiwan, covering the company’s latest work in artificial intelligence and supercomputing. The most notable was that NVIDIA’s Grace Hopper Superchip has entered full production; the chip is designed to drive systems running complex AI programs.

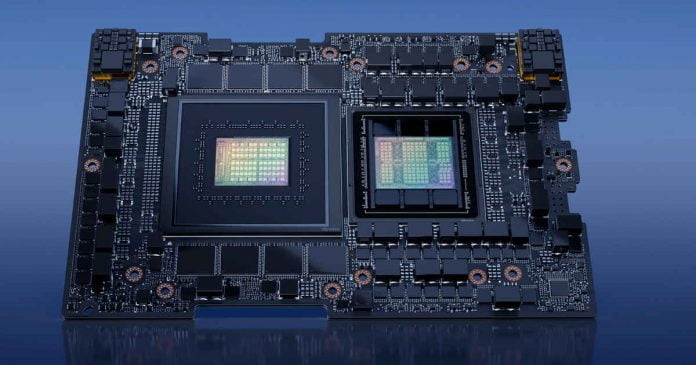

The Grace Hopper Superchip represents a significant leap forward in AI computing. It combines the Arm-based NVIDIA Grace CPU and the Hopper GPU architecture using NVLink-C2C interconnect technology. With 200 billion transistors, the chip integrates a 72-core Grace CPU, a Hopper GPU, 96GB of HBM3, and up to 480GB of LPDDR5X memory in a single package. NVLink-C2C provides up to 900GB/s of bandwidth between the CPU and GPU, a substantial advantage for memory-bound workloads.

Building upon the Grace Hopper Superchip’s capabilities, NVIDIA also unveiled the new DGX GH200 supercomputer. The DGX series has become the gold standard for demanding AI and high-performance computing (HPC) workloads. While the current DGX A100 systems are limited to eight A100 GPUs, the DGX GH200 overcomes this limitation by leveraging a new NVLink switch system. This system incorporates 36 NVLink switches, enabling the combination of 256 GH200 Grace Hopper chips and 144TB of shared memory, effectively behaving like a massive, unified GPU. The third-generation NVLink switch system provides up to 10 times the GPU-to-GPU bandwidth compared to previous-generation systems, while the CPU-to-GPU bandwidth is boosted by a factor of seven. It also offers 5 times greater interconnect power efficiency and supports up to 128TB/s of bisection bandwidth.
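As a rough sanity check on these figures, the short Python sketch below divides the quoted 144TB of shared memory across the 256 superchips and subtracts the 96GB of HBM3 on each one. It assumes binary units (1TB = 1024GB), which is an assumption on our part rather than something NVIDIA states here, and the function and constant names are purely illustrative.

```python
# Back-of-the-envelope check of the DGX GH200 memory figures quoted above.
# Assumption: "TB" and "GB" are binary units (1 TB = 1024 GB).

SUPERCHIPS = 256        # GH200 superchips in one DGX GH200
SHARED_MEMORY_TB = 144  # total shared memory quoted for the system
HBM3_GB = 96            # HBM3 per superchip
GB_PER_TB = 1024        # assumed conversion factor


def per_superchip_gb(total_tb: float, chips: int) -> float:
    """Return the share of the total memory pool held by one superchip, in GB."""
    return total_tb * GB_PER_TB / chips


per_chip = per_superchip_gb(SHARED_MEMORY_TB, SUPERCHIPS)
cpu_side = per_chip - HBM3_GB  # what remains after the GPU's HBM3

print(f"Memory per superchip: {per_chip:.0f} GB")
print(f"CPU-attached share:   {cpu_side:.0f} GB")
```

Under that assumption, each superchip contributes 576GB to the pool, of which 480GB would be CPU-attached LPDDR5X once the 96GB of HBM3 is set aside.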

In addition to the hardware advancements, NVIDIA introduced the MGX reference architecture, aimed at empowering original equipment manufacturers (OEMs) to develop AI supercomputers more efficiently. The modular design of MGX systems allows for the integration of various NVIDIA components, including CPUs, GPUs, data processing units (DPUs), and networking systems based on both x86 and Arm-based processors commonly found in today’s servers. This flexibility and scalability streamline the design and deployment process for AI-centric server solutions.

Moreover, NVIDIA showcased its new Spectrum-X Ethernet networking platform, a product of its acquisition of Mellanox. This platform highlights NVIDIA’s ability to optimize and fine-tune networking components and software to meet the demands of AI-centric environments. Marketed as “the world’s first high-performance Ethernet for AI networking platform,” Spectrum-X is specially designed to cater to AI servers and supercomputing clusters, offering superior performance and efficiency.

NVIDIA’s push into supercomputing with its Grace-based chips is already yielding results. For instance, ASUS, a prominent computing vendor, has partnered with NVIDIA to build the Taiwania 4 supercomputer for Taiwan’s National Center for High-Performance Computing. Equipped with 44 Grace CPU nodes, this supercomputer is expected to be one of Asia’s most energy-efficient when operational.

NVIDIA’s announcements at Computex 2023 showcased the company’s relentless pursuit of innovation in AI and supercomputing. The Grace Hopper Superchip, DGX GH200 supercomputer, MGX reference architecture, and Spectrum-X networking platform collectively demonstrate NVIDIA’s commitment to driving the future of AI computing and enabling groundbreaking advancements in various industries.