Facebook recently launched its first smart glasses in collaboration with Ray-Ban, but the company's interest in this technology appears to go further. Evidence of this is a new artificial intelligence project to develop AI systems that understand the world, and how it works, from a human perspective.

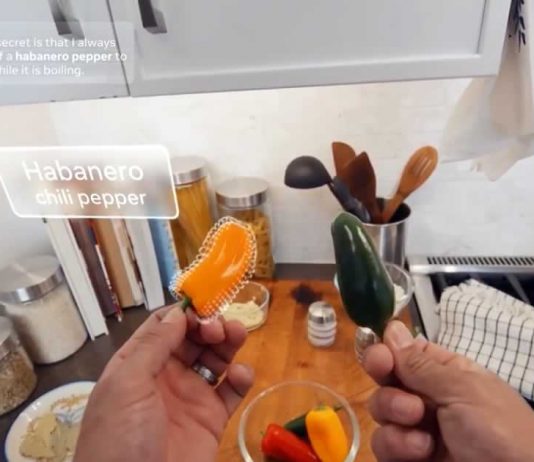

The new Facebook AI project, called ‘Ego4D’, aims to teach AI “to understand and interact with the world like we do, from a first-person perspective.”

Until now, AI systems have learned day-to-day routines for tasks such as showing us the fastest route to work. Facebook's ambitions go much further. Ego4D, which is still in development, is being carried out with a consortium of 13 universities around the world. Across these institutions, more than 700 participants have captured 2,200 hours of first-person video while performing everyday tasks.

Together with this consortium and Facebook Reality Labs Research (FRL Research), Facebook AI has developed five benchmarks centered on first-person experiences. The company expects them to drive progress toward real-world applications for future personal assistants:

- Episodic memory: What happened when? (e.g., “Where did I leave my keys?”)

- Forecasting: What am I likely to do next? (e.g., “Wait, you’ve already added salt to this recipe”)

- Hand and object manipulation: What am I doing? (e.g., “Teach me how to play the drums”)

- Audio-visual diarization: Who said what when? (e.g., “What was the main topic during class?”)

- Social interaction: Who is interacting with whom? (e.g., “Help me better hear the person talking to me at this noisy restaurant”)

Facebook hopes that progress on these five pillars will enable AI to interact with people not only in the physical world but also in the metaverse, which will encompass both augmented and virtual reality.

“Next-generation AI will need to learn from videos that show the world from the center of the action. AI that understands the world from this point of view could unlock a new era of immersive experiences, as devices like augmented reality (AR) glasses and virtual reality (VR) headsets become as useful in everyday life as smartphones,” says the Facebook AI team.

Kristen Grauman, a Facebook AI researcher, told The Verge that “systems trained on Ego4D might one day not only be used in wearable cameras but also home assistant robots, which also rely on first-person cameras to navigate the world around them.”