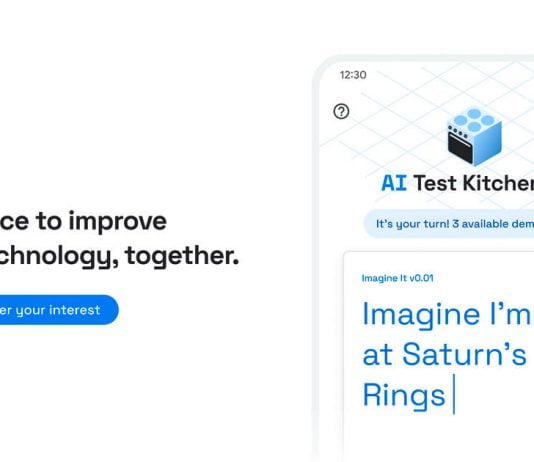

Earlier this year, Google announced the AI Test Kitchen app, which will allow users to interact with one of the company’s most advanced AI chatbots, LaMDA 2.

Researchers in Google's AI division have announced that registration is now open for users to request beta access to LaMDA 2, and that access will gradually expand to more users. According to Google, the LaMDA 2 beta "begins to gradually roll out to small groups of users in the US, launching on Android today and iOS in the coming weeks."

LaMDA 2 is the natural language processing model developed by the Mountain View giant, and it had already made headlines in recent weeks, when a Google developer was first put on leave and then fired after publicly stating that LaMDA (Language Model for Dialogue Applications) might have gained some degree of consciousness.

The first set of demos included in AI Test Kitchen explores the capabilities of the latest version of the LaMDA language model, which has "undergone key safety improvements." One such demo, called "Imagine It," lets you name a place and then follow "paths to explore your imagination."

Another demo, "List It," lets the user share a goal or topic, and LaMDA then breaks it down into a list of useful subtasks.

Finally, the "Talk About It (Dogs Edition)" demo offers "a fun, open-ended conversation about dogs and only dogs, which explores LaMDA's ability to stay on topic even if you try to veer off-topic."

With this app and these demos, Google aims to show users LaMDA 2's capacity for creative responses, one of the language model's main strengths. Google warns that some answers "can be inaccurate or inappropriate," a common problem with chatbots opened to the public, which have been known to produce harmful and hateful speech.

LaMDA 2, like many other chatbots, can produce "harmful or toxic responses based on biases in its training data, generating responses that stereotype and misrepresent people based on their gender or cultural background." Google says it is investigating this issue.