NVIDIA has announced the H100 NVL, a new variant of its Hopper GPU specifically designed for Large Language Models (LLMs) like OpenAI’s GPT-4.

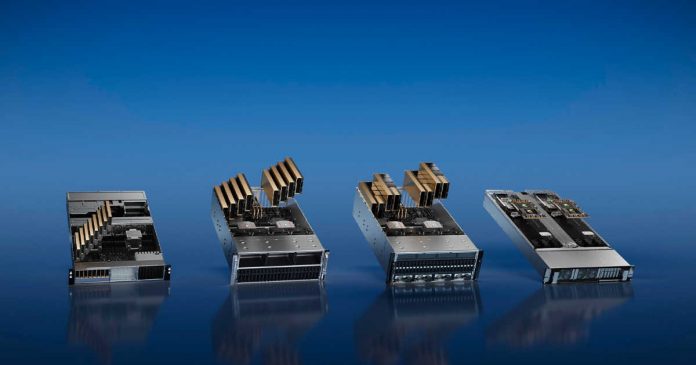

The dual-GPU card consists of two H100 PCIe boards that come already bridged together, and it carries more memory per GPU than any other H100 part or any NVIDIA product to date.

The H100 NVL targets a single market: large-scale language model deployment, where NVIDIA aims to ride the current wave of interest and extend its AI success. Large language models like the GPT family are, in many ways, constrained by memory capacity. Even an H100 accelerator fills up quickly when it has to hold all of a model's parameters (175B for the largest GPT-3 model). NVIDIA has therefore developed a new H100 SKU with more memory per GPU than the regular H100 parts, which top out at 80GB per GPU.
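To make the capacity constraint concrete, here is a rough back-of-the-envelope sketch of how much memory the weights alone would need. It assumes 2 bytes per parameter (FP16/BF16), counts only the weights (no activations, KV cache, or optimizer state), and treats 1GB as 10^9 bytes; the function names are illustrative, and the 94GB figure is the H100 NVL per-GPU capacity quoted later in this article.

```python
import math


def weight_memory_gb(num_params: float, bytes_per_param: int = 2) -> float:
    """Memory needed for the weights alone, in GB (1 GB = 1e9 bytes).

    Assumption: 2 bytes per parameter (FP16/BF16) unless overridden.
    """
    return num_params * bytes_per_param / 1e9


def gpus_needed(num_params: float, gb_per_gpu: float, bytes_per_param: int = 2) -> int:
    """Minimum number of GPUs whose combined memory can hold the weights."""
    return math.ceil(weight_memory_gb(num_params, bytes_per_param) / gb_per_gpu)


GPT3_PARAMS = 175e9  # largest GPT-3 model, as cited in the article

print(weight_memory_gb(GPT3_PARAMS))   # 350.0 GB of weights at 2 bytes each
print(gpus_needed(GPT3_PARAMS, 80))    # 5 GPUs at 80 GB each
print(gpus_needed(GPT3_PARAMS, 94))    # 4 GPUs at 94 GB each
```

Under these assumptions, the extra memory per GPU is enough to drop the minimum GPU count for a 175B-parameter model from five to four, before accounting for any runtime overhead.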

All GH100 GPUs come with six stacks of HBM memory (HBM2e or HBM3), each with a capacity of 16GB, for a nominal 96GB of VRAM per GPU. However, due to yield concerns, NVIDIA ships regular H100 parts with only five of the six stacks enabled, so the regular SKUs have only 80GB available.

The H100 NVL is a special SKU with all six stacks enabled, offering 94GB of memory per GH100 GPU, a 17.5% increase over the regular 80GB. The dual-GPU, dual-card H100 NVL looks like the SXM5 version of the H100 arranged on a PCIe card. The regular H100 PCIe is held back somewhat by slower HBM2e memory, fewer active SMs and Tensor Cores, and lower clock speeds, but the Tensor Core performance figures NVIDIA is quoting for the H100 NVL are all on par with the H100 SXM5, indicating that this card isn't cut down further the way the regular PCIe card is.
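The stack arithmetic behind these capacities can be checked with a few lines. This is only a sketch of the numbers quoted in the article; the constant names are illustrative, and the 94GB figure is taken as given rather than derived, since it sits slightly below the nominal six-stack total.

```python
STACKS_PER_GPU = 6   # HBM stacks on every GH100 package
GB_PER_STACK = 16    # capacity of each stack

nominal_gb = STACKS_PER_GPU * GB_PER_STACK   # all six stacks: 96 GB
regular_gb = 5 * GB_PER_STACK                # five stacks enabled: 80 GB
nvl_gb = 94                                  # usable per GPU on H100 NVL (quoted figure)

# Percentage increase of the NVL over the regular 80 GB parts.
increase_pct = 100 * (nvl_gb - regular_gb) / regular_gb

print(nominal_gb)     # 96
print(regular_gb)     # 80
print(increase_pct)   # 17.5
```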

The H100 NVL's headline feature is its large memory capacity: the dual-GPU card offers 188GB of HBM3 memory (94GB per GPU). Total memory bandwidth is 7.8TB/s, or 3.9TB/s per board.
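The card-level totals are simply the per-GPU figures summed over the two boards. A minimal sketch follows; the `Gpu` and `DualGpuCard` classes are hypothetical names introduced only for this illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Gpu:
    memory_gb: int          # usable HBM capacity per GPU
    bandwidth_tbs: float    # memory bandwidth per GPU, in TB/s


@dataclass(frozen=True)
class DualGpuCard:
    gpu: Gpu
    gpu_count: int = 2      # two bridged boards per H100 NVL

    @property
    def total_memory_gb(self) -> int:
        return self.gpu.memory_gb * self.gpu_count

    @property
    def total_bandwidth_tbs(self) -> float:
        return self.gpu.bandwidth_tbs * self.gpu_count


h100_nvl = DualGpuCard(Gpu(memory_gb=94, bandwidth_tbs=3.9))

print(h100_nvl.total_memory_gb)                  # 188
print(round(h100_nvl.total_bandwidth_tbs, 1))    # 7.8
```

Note that these are aggregate figures: each GPU has its own 94GB pool of HBM3, so the 188GB total is the sum of two separate memories, not one unified address space.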

This SKU is probably a response to the explosive success of ChatGPT and the rapid rise in AI demand that followed with the arrival of Bing and Bard. The price will rise accordingly, but given the booming LLM market, there should be customers willing to pay a sizable premium for a near-perfect GH100 package.

In conclusion, the H100 NVL is a new variant of NVIDIA’s Hopper GPU designed specifically for Large Language Models (LLMs) like OpenAI’s GPT-4. It offers more memory per GPU than any other H100 part or any NVIDIA product to date, and that extra capacity should help extend NVIDIA’s AI success. The H100 NVL is a significant step forward for the LLM market, and it will be interesting to see how it compares with other GPUs in the future.