As businesses adapt to a fast-paced digital world, reliable and robust IT support has become essential to success.

Whether you’re building your IT infrastructure from scratch or want to upgrade your IT management and maintenance, you’ll face two options: assembling an in-house IT department or outsourcing to a managed IT service provider.

Are you wondering which option is better for your business? This article covers the basics of each option and its pros and cons. You’ll also learn when it makes sense to hire a managed IT service provider and when it’s better to build an in-house team.

Understanding Managed IT Services

Managed IT services are delivered by managed service providers (MSPs): third-party companies that manage and take responsibility for a specific set of technology services on behalf of businesses.

Small and medium-sized businesses can use these services on a subscription basis to bring their technology processes up to the level of the industry giants in their field. Depending on your needs, managed services can range from a single, narrowly defined project to general, ongoing support.

Common services include equipment maintenance and monitoring, remote server management, security and IT systems management, and other support services. Some IT firms, like GoComputek Managed IT Services, also offer industry-specific IT support for sectors ranging from healthcare to manufacturing to non-profit organizations.

The Benefits Of Managed IT Services

Cheaper

Outsourcing your IT needs to a third party may seem expensive, but it’s often more cost-effective than hiring full-time IT employees. With managed IT, you pay only for the services you receive, with no monthly salaries or benefits to cover. You also don’t have to worry about supplying the tools and systems the provider needs to do its job.

In comparison, the monthly salary of a single full-time in-house IT expert can pay for a month of managed IT services, which include a team of specialists and the appropriate tools. Pricing models vary, but most managed IT services cost less than building an internal team.

Efficiency

Technology is constantly changing, so you need an IT team that can adapt to those changes and support your business’s growth. With an in-house team, you’re often limited to your employees’ experience and knowledge unless you invest in their training.

This is not a problem with a managed IT company. Any MSP that wants to provide the best service to its clients will invest in its employees’ training and career advancement. As mentioned earlier, you also get an entire team of IT professionals with varied skill sets and expertise, from web developers to systems analysts and cybersecurity specialists.

Scalable

Working with a managed IT firm makes it easier to scale your IT resources as your business grows. With pay-as-you-go service plans, you can upgrade your IT solutions and services during your busiest time of year and scale back down when demand drops.

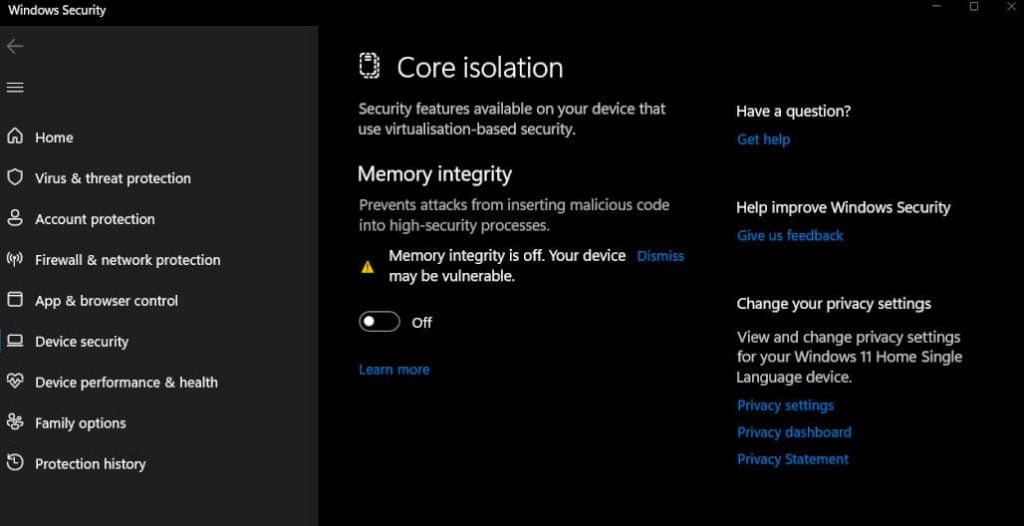

Secure

Technology offers convenience and makes your business more efficient. However, it also comes with inherent risks that can stop your company’s growth. If you want your company to thrive, you need to guard against evolving cyber threats.

You may think that outsourcing your IT processes to a third party is risky. However, if you can’t hire a top-notch cybersecurity specialist for your team, a managed IT service can protect your business better. MSPs have experience with most common cybersecurity risks, along with the tools and resources to defend against them.

The Drawbacks Of Managed IT Services

Less Control

Compared to keeping your IT processes in-house, outsourcing to an MSP means giving up complete control over your IT infrastructure. When you work with a managed IT service, you hand over the management of your network security, IT processes, and some of your data to a third-party company.

While they can keep you in the loop on emerging threats and significant updates, signing a contract with them means relying on them to manage and protect your network.

Limited On-Site Availability

Unlike in-house IT staff, outsourced IT firms are often located in a different region or even on the other side of the world. Without staff on-site, you may face longer response times, which can be a problem in an emergency. That said, most providers do their best to respond to their clients as quickly as possible.

A guaranteed response time in your service-level agreement (SLA) can also assure you that your concerns will be addressed and resolved promptly.

May Not Align With Your Company Standards, Culture, And Ethos

Most companies have their own culture, standards, and ethical codes. Unlike an in-house team that works closely within your company, an outsourced third party may not uphold your standards and values to your satisfaction. And if the provider doesn’t fit your company culture, you may end up with inferior service, or the partnership can quickly turn toxic.

Understanding An In-House IT Team

An in-house IT team is fairly self-explanatory: it means having an IT specialist or IT department working on your business premises. You hire the individual or team directly and assign them specific jobs, so the work stays ‘in-house.’

The Benefits Of An In-House IT Team

Operational Control

An in-house IT team is the best choice for businesses that prefer a hands-on approach to IT processes, data management, and cybersecurity. Because they’re regular employees working in your office, you can visit their department any time to ask questions or share concerns.

Talking to your IT specialist in person rather than by email or phone can help you resolve issues quickly and give you peace of mind.

Business-Specific Expertise

While you can find MSPs offering industry-specific IT services, nothing beats the business-specific expertise that an in-house IT team develops. Over time, your in-house team will build a deep, detailed understanding of your business’s internal systems and infrastructure.

With inside-out knowledge of your business’s IT infrastructure, an in-house team is better equipped to troubleshoot issues.

Customization

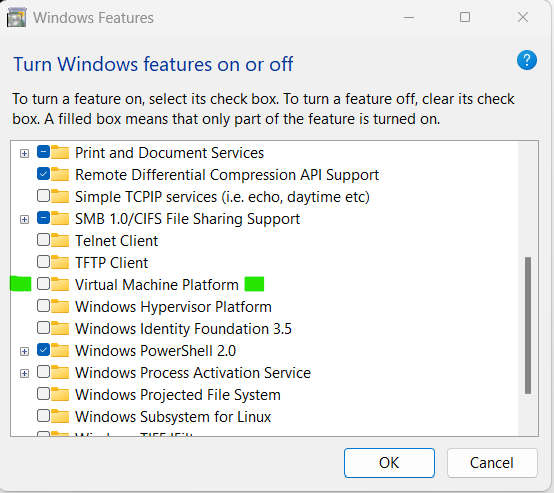

An in-house IT team lets you shape the team as you see fit. You can hire employees with the exact experience and qualifications you want. And because you know your company and its requirements best, you can judge how many IT employees you need to manage your business’s technology.

You can also choose and customize all the software and hardware you use, from antivirus and firewalls to email filtering and servers.

The Drawbacks Of An In-House IT Team

Expensive

Building an in-house IT team from the ground up can quickly add to your overhead expenses. As with any other employees, you’ll need to pay monthly salaries and provide benefits such as sick leave. You’ll also need to pay for the equipment they need to do their job and the software required to maintain your business network.

Off-The-Clock Issues

While an in-house team offers on-site availability, it may not be available 24/7. If your IT specialist works an average of eight hours a day, five days a week, who will maintain and monitor your business network at night, on weekends, and on holidays?

And if an unexpected issue occurs while your IT team members are unavailable, it can seriously hurt your business’s productivity.

Employee Turnover

Another issue with an in-house IT team arises when a team member resigns. You’ll need to find a replacement quickly to maintain the level of IT management and support your company needs.

Recruiting can take months and be expensive, especially if you’re looking for an IT expert with substantial experience and knowledge. Once hired, new team members also need time to learn your systems and undergo training to get up to speed with your business’s network and IT processes.

To Outsource Or To Build A Team?

Depending on your company’s needs, either option can be a good fit.

If you have the budget and want more control over your IT processes and data, an in-house IT team is the better option. Other circumstances that call for an in-house team instead of outsourcing include:

- You have highly sensitive data you don’t want to share with third-party providers

- You’re using custom software and applications or proprietary technologies

- You have a large employee count that requires in-person IT support and assistance

On the other hand, if you’re a startup with a limited budget and would rather skip the stress of finding the right IT specialists for your business, outsourcing to a managed IT service provider is the better fit. Outsourcing your IT management is also the clear winner in situations such as these:

- You have short-term needs

- You don’t have the right in-house specialists to tackle an IT issue or project

- Specific tasks are too time-consuming or repetitive

- You want your existing team to focus on your core business IT needs

- You want a project to be completed as quickly as possible

Takeaway

Technology has become a significant contributor to the growth of modern businesses. As technology advances, businesses need a reliable team, whether outsourced or in-house, to help manage and maintain their IT infrastructure and stay relevant in a competitive world.